Parsing Text using DSL Grammar

We have a task to parse the following file:

6 pcs apple

12 pcs orange

3 pcs peach

custname : John Tanazaki

address : 5382 Foo Ave

address2 :

zip : 380802

city : Kawaguchi-shi

state : SA

country : JAPAN

phone :

custmail : [email protected]

shipby : priority

invoice : 1544

comment :

Our goal is to convert this file into JSON format:

{

"items": [

{

"item": "apple",

"quantity": 6

},

{

"item": "orange",

"quantity": 12

},

{

"item": "peach",

"quantity": 3

}

],

"custname": "John Tanazaki",

"address": "5382 Foo Ave",

"address2": "",

"zip": "380802",

"city": "Kawaguchi-shi",

"state": "SA",

"country": "JAPAN",

"phone": "",

"custmail": "[email protected]",

"shipby": "priority",

"invoice": "1544",

"comment": ""

}

The top 3 lines can change depending on what the customer bought, a line for each item type. The first thing that comes to mind is to step through line-by-line and use a long list of rules for each line. But this would be a nightmare - we will see why later.

There is a better way. We can treat this text block as a Domain Specific Language (DSL) and build grammar to parse it. The grammar is trying to reconstruct the original logic that went into constructing the text block. Usually, this is unknown and we need to reverse-engineer it. In our order example, we know that the first few lines will contain item:quantity relationship.

What is this Grammar that we speak of?

We will be using what's known as a variant of Backus-Naur Form (BNF) to describe context-free grammar. It turns out that BNF is used everywhere, e.g. GNU-Bison is based on BNF and used in many modern compilers.

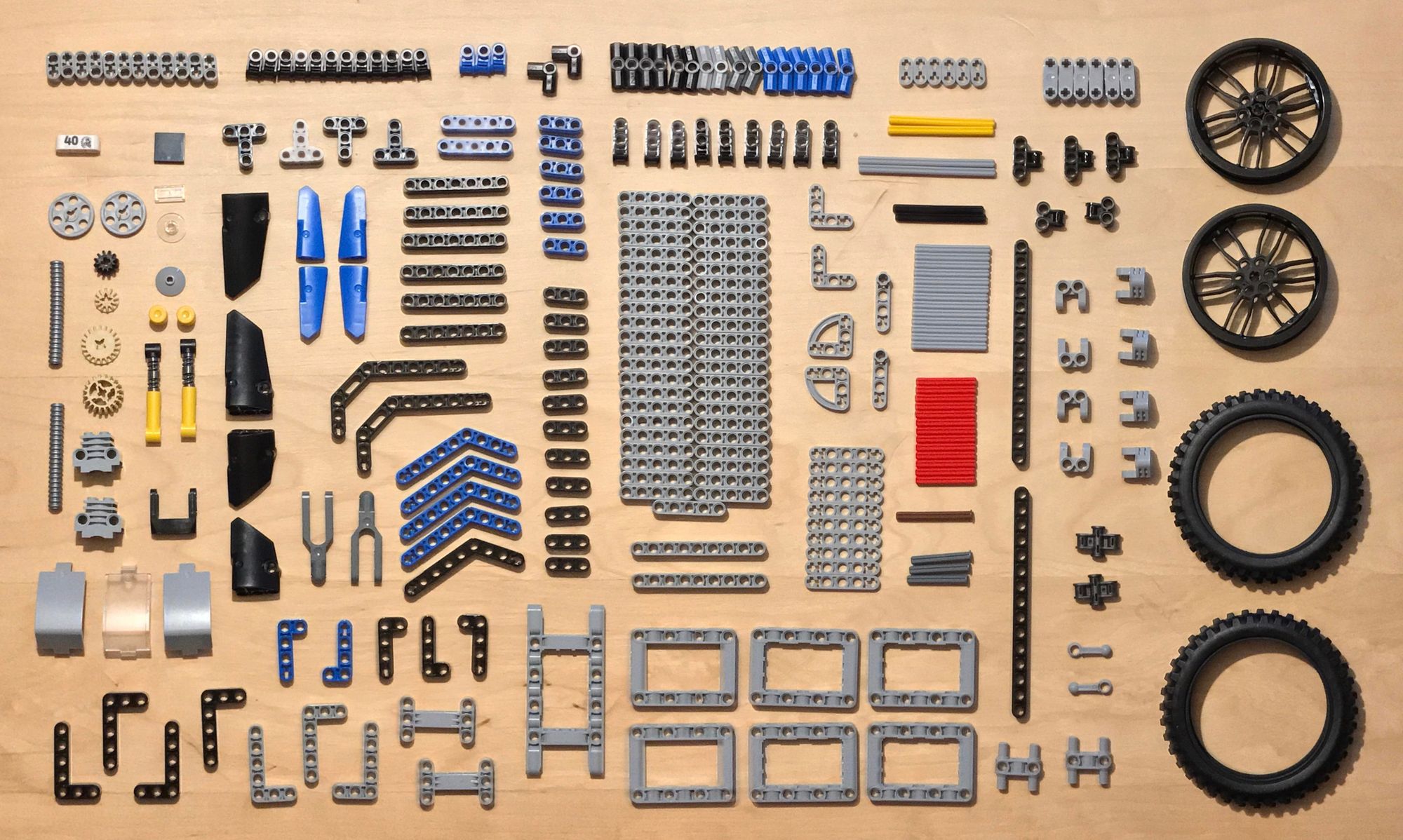

The parsing pipeline is composed of three major steps. The first step is to formally conduct a Lexical Analysis. Lexical analysis is a process of defining the types of lego bricks, so to speak, that we will be using to build the grammar. These are formally referred to as "tokens".

Semantic Parsing is the process of building an abstract syntax tree or AST. An AST is a representation of what the final lego build is comprised of. At the root would be the final build and the leaves would be each of the "knolled" lego pieces.

And the last part is Assembly. This is the process of traversing the AST and doing something with each node. In the case of compilers, each node would represent the node operation, an assembly language instruction.

Lexical Analysis

In the example order, the "knolling" of tokens involves determining the fundamental building blocks of the grammar. They are as follows:

| Token description | Token Name | Token Values |

|---|---|---|

| New lines | newline |

[\r\n]+ |

| White space (excluding newlines) | ws |

[^\S\r\n] |

| Value (any text) | value |

.* |

| Key | key |

'custname' / 'address2' / 'address' / 'zip' / 'city' / 'state' / 'country' / 'phone' / 'custmail' / 'shipby' / 'comment' / 'invoice' / 'printed' |

| Key/Value separator | colon |

: |

| Quantity | quantity |

\d+ |

Using these fundamental tokens we can build more complex tokens that build up the entire text block as follows:

| Token description | Token Name | Token Values |

|---|---|---|

| Record | record |

key ws colon ws value newline |

| Item | item |

quantity ws "pcs" ws value newline |

| Items | items |

item + |

| File | file |

(newline / items / record ) + |

Here, the / means OR operator. The rest of the syntax is standard Regular Expressions.

Parsimonious library for Python (EBNF Parser)

To build DSL grammar, we can use Parsimonious, a python library that is based on Parsing Expression Grammar (PEGs). Quoting the docs:

PEG parsers don't draw a distinction between lexing and parsing; everything is done at once. As a result, there is no lookahead limit, as there is with, for instance, Yacc. And, due to both of these properties, PEG grammars are easier to write: they're basically just a more practical dialect of EBNF. With caching, they take O(grammar size * text length) memory (though I plan to do better), but they run in O(text length) time.

PEGs can describe a superset of LL(k) languages, any deterministic LR(k)language, and many others—including some that aren't context-free (http://www.brynosaurus.com/pub/lang/peg.pdf). They can also deal with what would be ambiguous languages if described in canonical EBNF. They do this by trading the|alternation operator for the/operator, which works the same except that it makes priority explicit:a / b / cfirst tries matchinga. If that fails, it triesb, and, failing that, moves on toc. Thus, ambiguity is resolved by always yielding the first successful recognition.

The syntax for building tokens is based on the simplified Extended Backus-Naur Format (EBNF). The syntax for the tokens is detailed in README.

Abstract Syntax Tree (AST)

The token relationship can be described as an AST, starting with file as the root node:

All nodes are broken down into fundamental building blocks. I've added a newline node to the file since there can be empty lines at the end of the file. This node will return None as we will see in the next section. The tree can be represented using Parsimonious syntax in Python as follows:

from parsimonious.grammar import Grammar

grammar = Grammar(

r"""

file = (newline / items / record) +

items = item +

item = quantity ws "pcs" ws value newline

quantity = ~"\d+"

record = key ws colon ws value newline

colon = ~":"

key = 'custname' / 'address2' / 'address' / 'zip' / 'city' / 'state' / 'country' / 'phone' / 'custmail' / 'shipby' / 'comment' / 'invoice' / 'printed'

value = ~".*"i

ws = ~"[^\S\r\n]*"

newline = ~"[\r\n]+"

"""

)

Assembly

We can use the parsimonious library to "assemble" the nodes and create a JSON object. We first create a class call Order that inherits from NodeVisitor Parsimonious class. Each node in the tree gets a method with a visit_ prefix. For example, for node item, we need to define visit_item method.

The full Order class is defined as follows:

from parsimonious import NodeVisitor

class Order(NodeVisitor):

def visit_file(self, node, visited_children):

""" Assembles items and records for a file """

result = {'items': []}

for i in visited_children:

if i[0] is None:

continue

try:

result.update(dict(i))

except Exception as e:

print(i)

print(e)

pass

return result

def visit_newline(self, node, visited_children):

""" Newline node does nothing and returns None """

return None

def visit_items(self, node, visited_children):

""" Constructs a dictionary for each item from items """

return 'items', [{'item': p, 'quantity': q} for p, q in visited_children]

def visit_item(self, node, visited_children):

""" Creates a tuple of item, quantity """

quantity, _, _, _, product, _ = visited_children

print(f'ITEM: {product.text} : {quantity.text}')

return product.text, int(quantity.text)

def visit_record(self, node, visited_children):

""" Assembles a record (tuple) of key, values """

key, _, _, _, value, *_ = node.children

print(f'RECORD: {key.text} : {value.text}')

return key.text, value.text

def visit_value(self, node, visited_children):

""" Returns a value as is """

return node

def generic_visit(self, node, visited_children):

""" Returns all """

return visited_children or node

We can now convert to JSON :

import json

order = Order()

tree = grammar.match(data)

output = order.visit(tree)

output_json = json.dumps(output)

print(output_json)

Which prints our JSON order object:

{

"items": [

{

"item": "apple",

"quantity": 6

},

{

"item": "orange",

"quantity": 12

},

{

"item": "peach",

"quantity": 3

}

],

"custname": "John Tanazaki",

"address": "5382 Foo Ave",

"address2": "",

"zip": "380802",

"city": "Kawaguchi-shi",

"state": "SA",

"country": "JAPAN",

"phone": "",

"custmail": "[email protected]",

"shipby": "priority",

"invoice": "1544",

"comment": ""

}

Why not rule-based line-by-line parsing?

One must wonder - why write grammar when we can simply put some rules in a for loop that can traverse the file line-by-line?

Let's imagine our text block order format has evolved overtime and now there is a category field.

fruits:

6 pcs apple

12 pcs orange

3 pcs peach

vegetables:

3 pcs onions

5 pcs carrots

custname : John Tanazaki

address : 5382 Foo Ave

address2 :

zip : 380802

city : Kawaguchi-shi

state : SA

country : JAPAN

phone :

custmail : [email protected]

shipby : priority

invoice : 1544

comment :

which should parse as:

{

"items": {

"fruits": [

{

"item": "apple",

"quantity": 6

},

{

"item": "orange",

"quantity": 12

},

{

"item": "peach",

"quantity": 3

}

],

"vegetables": [

{

"item": "onions",

"quantity": 3

},

{

"item": "carrots",

"quantity": 5

}

]

},

"custname": "John Tanazaki",

"address": "5382 Foo Ave",

"address2": "",

"zip": "380802",

"city": "Kawaguchi-shi",

"state": "SA",

"country": "JAPAN",

"phone": "",

"custmail": "[email protected]",

"shipby": "priority",

"invoice": "1544",

"comment": ""

}

It is easy to modify the original AST with the following grammar:

grammar = Grammar(

r"""

file = (newline / categories / items / record) +

categories = category +

category = key_cat ws colon ws newline items

items = ws item +

item = ws quantity ws "pcs" ws value newline

quantity = ~"\d+"

record = key ws colon ws value newline

colon = ~":"

key_cat = 'fruits' / 'vegetables'

key = 'custname' / 'address2' / 'address' / 'zip' / 'city' / 'state' / 'country' / 'phone' / 'custmail' / 'shipby' / 'comment' / 'invoice' / 'printed'

value = ~".*"i

ws = ~"[^\S\r\n]*"

newline = ~"[\r\n]+"

"""

)

And modify the Order class as:

class Order(NodeVisitor):

def visit_file(self, node, visited_children):

""" Assembles items and records for a file """

""" Returns file """

result = {}

for i in visited_children:

if i[0] is None:

continue

try:

result.update(dict(i))

except Exception as e:

print(i)

print(e)

pass

return result

def visit_newline(self, node, visited_children):

""" Newline node does nothing and returns None """

return None

def visit_categories(self, node, visited_children):

""" Creates a tuple of items and dictionary of categories """

return 'items', dict(visited_children)

def visit_category(self, node, visited_children):

""" Creates a tuple of category and list of items """

key, _, _, _, _, items = visited_children

print(f'CATEGORY: {key[0].text} : {items[1]}')

return key[0].text, items[1]

def visit_items(self, node, visited_children):

""" Constructs a dictionary for each item from items """

return 'items', [{'item': p, 'quantity': q} for p, q in visited_children[1]]

def visit_item(self, node, visited_children):

""" Creates a tuple of item, quantity """

_, quantity, _, _, _, product, _ = visited_children

print(f'ITEM: {product.text} : {quantity.text}')

return product.text, int(quantity.text)

def visit_record(self, node, visited_children):

""" Assembles a record (tuple) of key, values """

key, _, _, _, value, *_ = node.children

print(f'RECORD: {key.text} : {value.text}')

return key.text, value.text

def visit_value(self, node, visited_children):

""" Returns a value as is """

return node

def generic_visit(self, node, visited_children):

""" Returns all """

return visited_children or node

It's possible to parse it line-by-line but it will get laborious, erroneous, and incredibly fragile to maintain and debug. Lexical analysis and semantic parsing is a very powerful tool, one to have in your pocket when there is a need for parsing weirdly formatted log files, text blobs, or even building your own mock compiler for learning purposes.

The end.